Introduction

In the lead-up to China’s 2025 International Workers’ Day celebration, a peculiar irony unfolded across the country’s AI landscape. While tech giants such as Alibaba released Qwen 3, claiming it outperformed offerings from OpenAI and Google, and DeepSeek unveiled its new Prover V2 model, the very workers these innovations were meant to celebrate found themselves caught in an unprecedented digital contradiction.

China has developed and enforced some of the world’s most extensive AI regulations to safeguard workers from algorithmic exploitation, even as it deploys AI systems that systematically suppress conversations about worker rights and collective action. This contradiction illustrates ‘algorithmic authoritarianism’: a mode of governance in which AI plays a dual role of protection and repression, creating a controlled environment for worker safety while obstructing avenues for genuine labour mobilisation. This article extends Mark Jia’s notion of ‘Authoritarian Privacy’ to analyse this contradiction in light of recent developments in China’s AI landscape, and to understand how authoritarian regimes use technological innovation not only to sustain control but also to legitimise that control through selective safeguarding.

Safeguards through Regulation

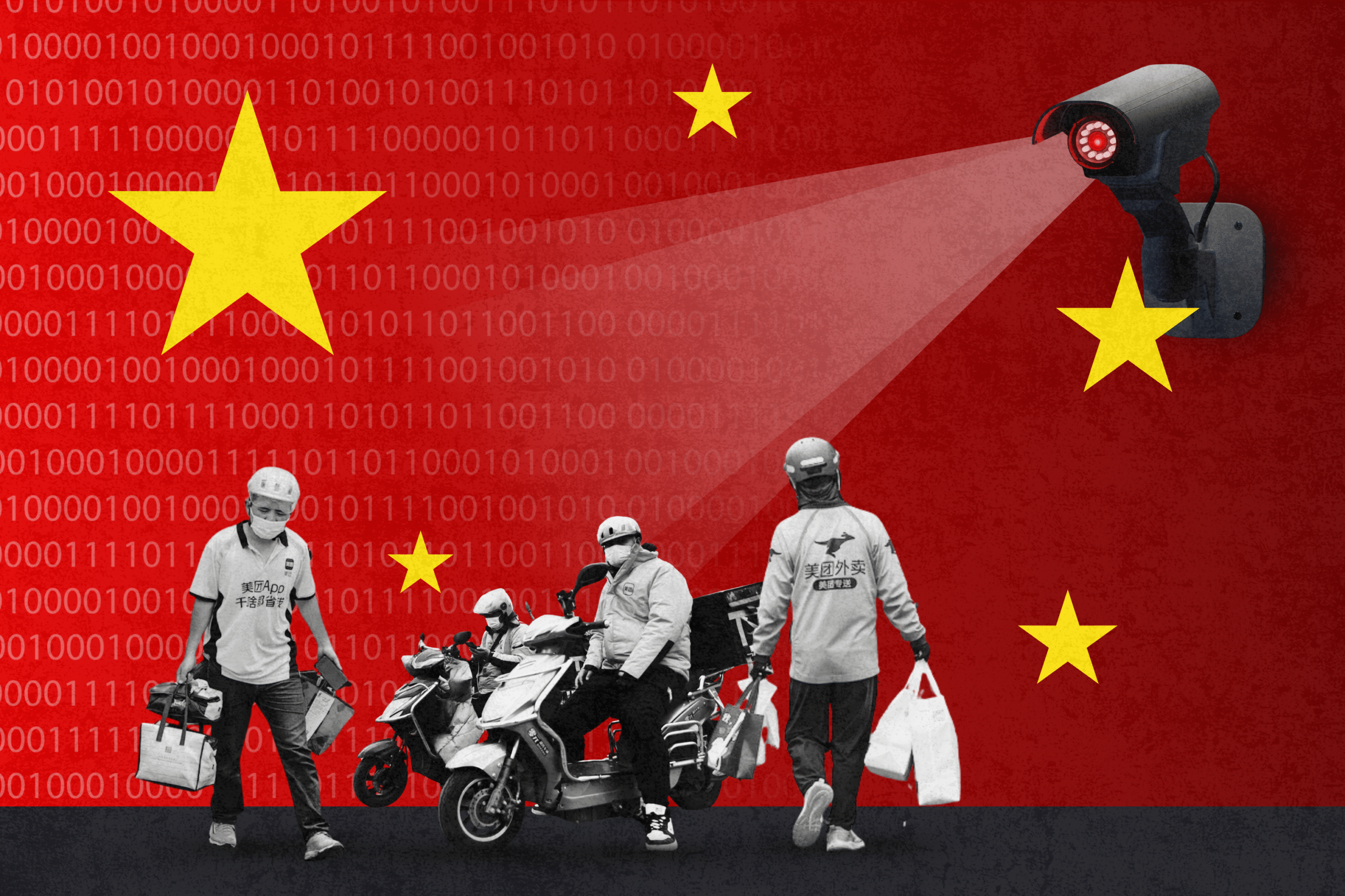

China’s regulatory stance on algorithmic labour management seems notably progressive at first glance. The “Internet Information Service Algorithmic Recommendation Management Provisions”, issued in 2021, directly confront algorithmic exploitation in the gig economy, prohibiting platforms from using algorithms to impose “unreasonable” delivery deadlines that jeopardise worker safety. These safeguards arose in response to documented abuses in Chinese delivery services. Since 2020, exploitation on platforms such as Meituan and Ele.me has drawn public scrutiny as delivery workers face increasingly perilous working conditions. Between 2016 and 2019, under stringent algorithmic management, average delivery times across the industry fell by 10 minutes, compelling rural migrant workers, who lacked formal contracts or insurance, into dangerous races against algorithmic expectations. Many came to describe delivery work as ‘a race with Death, a competition with traffic cops, and a friendship with red lights.’ Against this backdrop, the 2021 regulation came as a relief for gig workers: both Meituan and Ele.me loosened their delivery-time requirements, giving delivery workers some breathing space.

The Limits of Safeguards and AI as Political Gatekeepers

Yet Beijing’s tolerance extended only as far as these regulatory protections for algorithmically managed workers and its efforts to address the social problems arising from AI’s spread across the economy; it evaporated quickly in the face of direct worker mobilisation. Even before the Meituan and Ele.me investigations, workers had intensified strikes against platform exploitation, and the leaders of these protests were often detained by police rather than heard by policymakers. This dual response, in which the state intervenes through regulation to placate gig workers while cracking down on unauthorised collective action, carries an implicit message: worker protection flows from state benevolence, not grassroots organising.

The protective narrative disintegrates further on closer scrutiny of how these AI systems handle discussion of workers’ protests. In the first four months of 2025 alone, China Labor Bulletin, an NGO that was dissolved in June 2025, just two months ago, documented approximately 540 worker protests. Yet Chinese AI models consistently deny that such protests exist. When asked about labour protests in China during 2025, DeepSeek initially asserted that “China is a country governed by the rule of law, where the rights and interests of workers are highly protected by the government,” claiming that social harmony prevails under the leadership of the Communist Party and dismissing the occurrence of widespread protests. When questioned in Mandarin, it even warned against disseminating ‘false information’. Alibaba’s recently launched Qwen 3 behaved similarly, stating that “disturbing the social order was prohibited” when asked about labour protests.

The censorship does not stop at chatbot answers. China has begun integrating these AI platforms into its broader surveillance network. The launch in February 2025 of GoLaxy’s Comprehensive Social Listening System, developed in collaboration with DeepSeek, marks a notable advance in how authorities monitor online conversations. Some argue that it will significantly affect labour-related content and discussion on apps such as Douyin (TikTok’s Chinese counterpart) and Kuaishou, which are used by the working-class population. Even more concerning is the integration of AI into China’s traditional petition system, which has historically served as a safety valve for citizens to voice grievances against local government misconduct. This could expose the very workers who seek to use official channels to resolve labour disputes to persecution by local authorities.

To explain the state’s dual role of simultaneously protecting and suppressing workers’ rights, this article extends Mark Jia’s conception of ‘Authoritarian Privacy’ to China’s AI ecosystem. Jia’s framework explains the paradox of an authoritarian state having robust privacy laws. He argues that by enacting privacy laws, the state positions itself as the protector of citizens against private companies and local governments, thereby legitimising its own centralised control and surveillance.

Just as the state safeguards citizens’ data from corporations to justify its own political surveillance, it shields workers from exploitative algorithms to legitimise its repression of collective action. By enacting regulations such as the 2021 “Provisions” to curb the excesses of platforms like Meituan and Ele.me, Beijing casts itself as a compassionate protector of the labour force.

Conclusion

Beijing’s portrayal of state-sponsored protection bolsters its legitimacy and blunts criticism of its stringent crackdowns on unauthorised worker protests. It helps Beijing present itself as a benevolent guardian of the working class in the face of rising exploitation by private companies and local governments. At the same time, its suppression of workers’ collective action reveals Beijing’s unease with any potential threat to the CCP’s hold on power.